This transcript has been edited for clarity.

Welcome to Impact Factor, your weekly dose of commentary on a new medical study. I'm Dr F. Perry Wilson of the Yale School of Medicine.

I want you to listen to this audio file and see if you can recognize it.

[Click on the video above to hear the audio clip.]

Take a second. Maybe play it back again. Does it ring a bell?

Some of you, particularly of my generation, may recognize the characteristic rhythm guitar and harmony of Pink Floyd's "Another Brick in the Wall." But you haven't heard it like this before. No one has heard any song like this before because this is the first song reconstructed from the electrical signals in a patient's brain.

The human brain is the most complex object in the known universe. It is the most fascinating and the most mysterious. The entire variety of human experience, all our emotions, our perceptions, our sensations are housed in those 1300 grams of interconnected tissue.

And the perception of music employs so many of the brain's faculties because music is more than a fancy form of speech. There is rhythm, harmony, tone, and timbre — all things we can consciously and even unconsciously perceive. How does that happen?

To investigate the way the brain processes music, researchers led by Ludovic Bellier at UC Berkeley examined 29 patients undergoing neurosurgery. Their results appear in a new paper in PLOS Biology.

The patients, who were conscious during the operation, had about 300 electrodes placed directly on the surface of the brain. And then they listened to that all-time classic of rock opera, "Another Brick in the Wall (Part 1)."

The fact that researchers could reconstruct the song from the electrodes is clearly the coolest part of the study, but it wasn't really its purpose. The purpose was to identify the regions of the cortex that process music and its individual components.

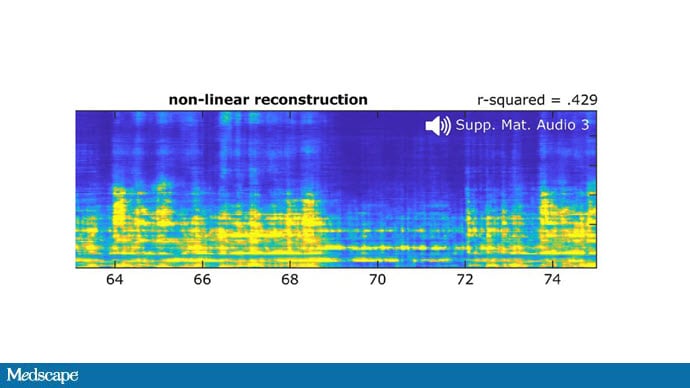

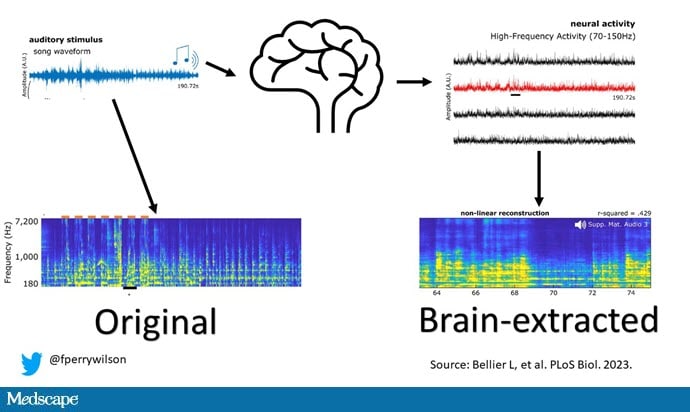

The general approach was to transform the original Pink Floyd audio clip into an auditory spectrogram that looks like this.

It's basically a mathematical transformation of the original song, at somewhat lower fidelity. This is what the spectrogram sounds like when transformed back into audio.

[Click on the video above to hear the audio clip.]
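For readers curious what turning audio into a spectrogram involves, here is a minimal sketch using a plain short-time Fourier transform in NumPy. The study used an auditory spectrogram, which spaces frequencies on a perceptually motivated scale; the linear-frequency version below is a simpler stand-in, and the function name, window length (`n_fft`), and hop size (`hop`) are illustrative assumptions. Keeping only the magnitude of each frequency bin and discarding phase is one reason a spectrogram sounds lower-fidelity when converted back to audio.

```python
import numpy as np


def magnitude_spectrogram(signal, n_fft=512, hop=128):
    """Magnitude spectrogram via a short-time Fourier transform (STFT).

    Returns an array of shape (n_frames, n_fft // 2 + 1): one row per
    time frame, one column per linear-frequency bin. Phase is discarded.
    """
    if len(signal) < n_fft:
        raise ValueError("signal is shorter than one analysis window")
    window = np.hanning(n_fft)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    # rfft keeps only the non-negative frequencies of a real-valued signal
    return np.abs(np.fft.rfft(frames, axis=1))


# Usage: one second of a 440 Hz tone sampled at 16 kHz (hypothetical input)
sr, n_fft = 16000, 512
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)

spec = magnitude_spectrogram(tone, n_fft=n_fft)
peak_bin = spec.mean(axis=0).argmax()  # frequency bin with the most energy
peak_hz = peak_bin * sr / n_fft        # each bin spans sr / n_fft = 31.25 Hz
print(spec.shape, peak_hz)
```

The strongest bin lands within one bin width of 440 Hz; a finer frequency grid would need a longer window, at the cost of coarser timing.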

The researchers then took the signals from those 300-plus electrodes and, through a combination of machine-learning techniques, developed a model that generates a spectrogram from the electrical signals. That spectrogram could then be translated back to audio, and you get, well, you get this:

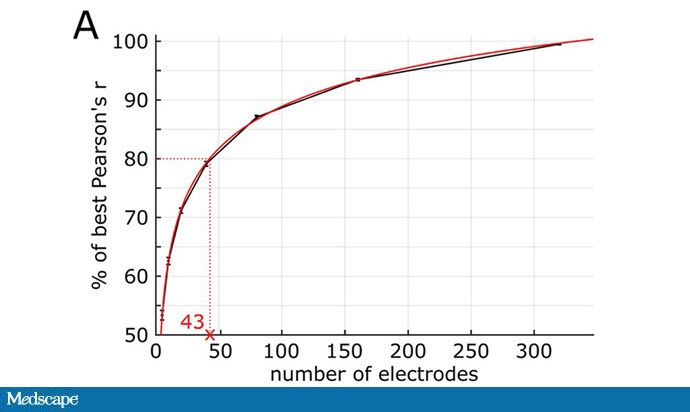

That provides some proof of concept. With granular-enough data on brain electrical signals, you can recapitulate something recognizable. Presumably, with more electrodes, more granularity, you could produce an even more recognizable song.
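The decoding idea can be illustrated with one common, generic approach: a linear (ridge) regression that maps electrode activity at each time point to the spectrogram's frequency bins. This is a hedged sketch, not the study's pipeline; the researchers combined several machine-learning techniques, and the synthetic data, array sizes, regularization strength `alpha`, and helper names below are hypothetical. Real decoders typically also feed in time-lagged neural activity, which is omitted here for brevity. Pearson correlation is used as the similarity measure, which is likewise an assumption.

```python
import numpy as np


def fit_ridge_decoder(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    X: (n_timepoints, n_electrodes) neural features
    Y: (n_timepoints, n_freq_bins) target spectrogram
    Returns W with shape (n_electrodes, n_freq_bins).
    """
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y)


def decode(X, W):
    """Predict a spectrogram of shape (n_timepoints, n_freq_bins)."""
    return X @ W


# Hypothetical synthetic data: 300 "electrodes", 32 frequency bins
rng = np.random.default_rng(0)
n_time, n_elec, n_freq = 2000, 300, 32
true_W = rng.normal(size=(n_elec, n_freq))

X_train = rng.normal(size=(n_time, n_elec))
Y_train = X_train @ true_W + 0.5 * rng.normal(size=(n_time, n_freq))
W = fit_ridge_decoder(X_train, Y_train, alpha=1.0)

# Score on held-out data: mean Pearson correlation across frequency bins
X_test = rng.normal(size=(500, n_elec))
Y_test = X_test @ true_W + 0.5 * rng.normal(size=(500, n_freq))
Y_pred = decode(X_test, W)
r = np.mean(
    [np.corrcoef(Y_test[:, k], Y_pred[:, k])[0, 1] for k in range(n_freq)]
)
print(f"mean correlation across frequency bins: {r:.3f}")
```

On clean synthetic data like this the correlation is near perfect; real neural recordings are far noisier, which is why reconstructions sound as muffled as the clip above.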

In fact, the researchers quantify the similarity of the output vs the number of electrodes here. It's a standard logarithmic relationship. There are clearly diminishing returns, but studies like this make me realize that the possibility of, quite literally, reading thoughts is no longer pure science fiction.
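To make the diminishing returns concrete, suppose decoding similarity $r$ follows a logarithmic curve in the number of electrodes $N$, with constants $a$ and $b$ that are purely illustrative here (the fitted values aren't given in this piece):

$$
r(N) \approx a + b\ln N
\quad\Longrightarrow\quad
r(2N) - r(N) \approx b\ln 2,
\qquad
\frac{dr}{dN} \approx \frac{b}{N}.
$$

Under that assumption, every doubling of the electrode count buys the same fixed gain, so going from 100 to 200 electrodes helps about as much as going from 1,000 to 2,000, and each additional electrode matters less than the one before.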

The team could also identify which electrodes were contributing the most to the model. You can see, as you might expect, a lot of contribution from electrodes in the superior temporal gyrus, though I should note that there were no electrodes on the nearby primary auditory cortex — a limitation of this study.

They also found that electrodes on the right side of the brain contribute more to the model than those on the left. Digging into this a bit, it turns out that music is encoded bilaterally, but the information in the left hemisphere is redundant with that on the right, while the right encodes extra information that isn't present on the left. I'm probably reading too much into this, but part of me thinks this might reflect the emotional response that sets music apart from ordinary speech.

While this work is incredibly impressive, it's still early. One can imagine some clinical applications (for those with certain types of hearing loss, maybe), but that's not what I find so intriguing about this type of research. What intrigues me is the probing of the brain's deeper functions, beyond the rather blunt neuroanatomy that tells us this part feels pain and that part moves a given muscle: functions like our ability to appreciate music, functions that separate us from other life forms on this planet. Functions that are human. There has been a wall of ignorance in our understanding of the brain. We are now dismantling it, brick by brick.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and director of Yale's Clinical and Translational Research Accelerator. His science communication work can be found in the Huffington Post, on NPR, and here on Medscape. He tweets @fperrywilson and his new book, How Medicine Works and When It Doesn't, is available now.

August 16, 2023 at 01:00AM

The Sound of Music Directly From Your Brain - Medscape
